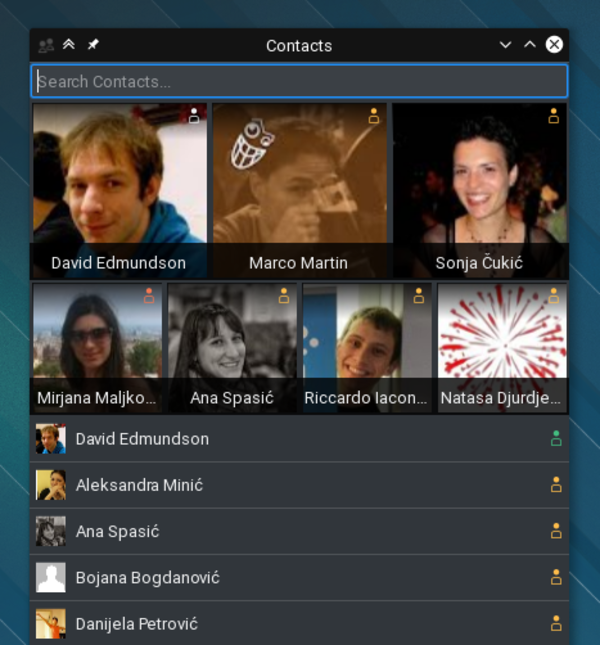

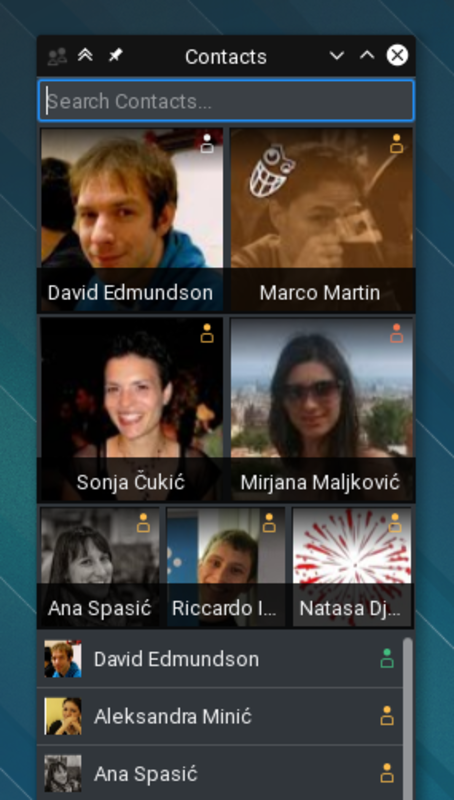

A long time ago, I joked about Raptor mode for Lancelot. The idea was plausible thanks to a tool I had started developing to make Lancelot development easier: PUCK, the Plasma UI Compiler (as named by Danny Allen). It generated C++ code from an XML-based UI definition.

After a while, I stopped working on PUCK, since Lancelot's UI was no longer changing that much. I even removed it from the build system.

Now, with QML, the need for a tool like that has disappeared.

One thing I do not like about QML, though, is its use of JavaScript.

An alternative

Some time ago, I got the idea to create a simple format that compiles to QML and lets the user write code in any language that can be compiled to JavaScript. I decided to base the format on YAML (every language I know of has a parser for it, and it is not XML :) ).

YAML has its limitations, and some of them make it a bit problematic for this use case, but all in all I think it can work.
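
To make the idea concrete, here is a minimal Python sketch of the YAML-to-QML step. It is not PUCK's code: it assumes the YAML has already been parsed into plain dicts and lists, copies every ordinary key verbatim as a QML binding, and handles only the `properties` and `children` keys used in the example further down. The function names `qml_value` and `to_qml` are hypothetical, and function bodies are deliberately left out because they first have to go through a script compiler.

```python
def qml_value(value):
    """Render a parsed YAML scalar as QML source text."""
    # Check bool first: in Python, bool is a subclass of int
    if isinstance(value, bool):
        return "true" if value else "false"
    # Numbers and expressions such as Settings.screenWidth are copied as-is
    return str(value)


def to_qml(type_name, node, indent=0):
    """Render one object, given as a dict parsed from YAML, as a QML object block."""
    pad = "    " * indent
    inner = pad + "    "
    lines = [f"{pad}{type_name} {{"]
    for key, value in node.items():
        if key == "properties":
            # Assumed shape: a list of one-entry dicts such as {"int acc": 0}
            for decl in value:
                for name, default in decl.items():
                    lines.append(f"{inner}property {name}: {qml_value(default)}")
        elif key == "children":
            # Assumed shape: each child is a one-entry dict {TypeName: {...}}
            for child in value:
                for child_type, child_node in child.items():
                    lines.append(to_qml(child_type, child_node, indent + 1))
        elif key == "functions":
            # Function bodies need a script compiler first; out of scope here
            raise NotImplementedError("functions are not handled in this sketch")
        else:
            # Any other key becomes a plain QML property binding
            lines.append(f"{inner}{key}: {qml_value(value)}")
    lines.append(f"{pad}}}")
    return "\n".join(lines)


tree = {
    "id": "root",
    "width": "Settings.screenWidth",
    "properties": [{"int acc": 0}],
    "children": [{"Timer": {"id": "gameOverTimer", "interval": 1500, "repeat": False}}],
}
print(to_qml("Rectangle", tree))
```

Nested objects are rendered recursively, so indentation in the output follows the nesting depth of the YAML.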

PUCK - the new one

Since the old PUCK hasn't been used for a long time, and the new project is kinda related, I decided to reuse the old name.

To cut a long story short, I'll just post an example of what the current syntax looks like. I'll write more details later (along with a small manual), once the syntax becomes stable enough. I'm still planning a few small changes that should make PUCK a desirable alternative to QML for more than just the ability to replace JavaScript.

This example reimplements the first part of samegame.qml from the Qt5 demos (I'm too lazy to reimplement the whole example).

    imports:
      - QtQuick 2.0
      - QtQuick.Particles 2.0
      - ^content/samegame.js as Logic
      - ^content

    Rectangle:
      id: root
      width: Settings.screenWidth
      height: Settings.screenHeight

      properties:
        - int acc: 0

      functions:
        loadPuzzle: |
          @coffee
          Logic.cleanUp() if gameCanvas.mode != ""
          Logic.startNewGame(gameCanvas, "puzzle",
              "levels/level" + acc + ".qml")

        nextPuzzle: |
          @lang=livescript;global=acc
          acc = (acc + 1) % 10
          loadPuzzle()

      children:
        - Timer:
            id: gameOverTimer
            interval: 1500
            running : gameCanvas.gameOver && gameCanvas.mode == "puzzle"
            repeat : false
            onTriggered: |
              @coffee
              Logic.cleanUp()
              nextPuzzle()

        - Image:
            source: ^content/gfx/background.png
            anchors.fill: parent
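
Each function body in the example starts with a header line (`@coffee`, `@lang=livescript;global=acc`) that selects the language. The Python sketch below shows one way such a header could be split off; the rules are inferred from those two lines only and are assumptions: a bare name is shorthand for the language, `key=value` pairs are separated by `;`, and a body without a header is treated as JavaScript. One plausible reading of `global=acc` is that `acc` should refer to the QML property rather than a new local variable, since plain assignment in both CoffeeScript and LiveScript would otherwise declare a local; the sketch just records the value. `parse_header` is a hypothetical name.

```python
def parse_header(body):
    """Split a function body into (options, code) based on an optional '@' header line."""
    first, _, rest = body.partition("\n")
    first = first.strip()
    options = {"lang": "javascript"}  # assumed default when there is no header
    if not first.startswith("@"):
        return options, body
    for item in (part.strip() for part in first[1:].split(";")):
        if not item:
            continue
        if "=" in item:
            # Long form: key=value pairs such as "lang=livescript" or "global=acc"
            key, _, value = item.partition("=")
            options[key.strip()] = value.strip()
        else:
            # Shorthand: a bare name selects the language, as in "@coffee"
            options["lang"] = item
    return options, rest


options, code = parse_header("@lang=livescript;global=acc\nacc = (acc + 1) % 10\nloadPuzzle()\n")
print(options)  # {'lang': 'livescript', 'global': 'acc'}
print(code, end="")
```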

Error reporting

One of the great things about compiling to QML is that you get script errors while building your project, not at runtime.

Compilation errors are reported in the console output, along with the code that failed, and also inside the resulting QML.

Console output of a failed CoffeeScript function:

    Error: Compilation failed
    Source:
    1 function === without arguments
    2
    Error message:
    [stdin]:1:1: error: reserved word 'function'
    function === without arguments
    ^^^^^^^^

Inside the resulting QML file:

    function error_coffee_function() /*
    [stdin]:1:1: error: reserved word 'function'
    function === without arguments
    ^^^^^^^^
    */
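
Both outputs are easy to produce once the compiler's error message has been captured. The Python sketch below mimics their layout; it is an illustration, not PUCK's implementation. The function names are hypothetical, and the stub name `error_coffee_function` is simply copied from the sample above rather than derived from any documented naming rule. One detail worth handling: a `*/` inside the message would close the QML comment early, so the sketch breaks it up.

```python
def console_report(source, message):
    """Format a failed compilation for the terminal, following the layout shown above."""
    # Number every source line; rstrip avoids a trailing space on empty lines
    numbered = [f"{n} {line}".rstrip() for n, line in enumerate(source.split("\n"), start=1)]
    return "\n".join(["Error: Compilation failed", "Source:", *numbered,
                      "Error message:", message])


def qml_error_stub(name, message):
    """Keep the compiler's message in the generated QML, inside a comment."""
    # A "*/" in the message would end the comment early, so break it up
    safe = message.replace("*/", "* /")
    return f"function {name}() /*\n{safe}\n*/"


msg = "[stdin]:1:1: error: reserved word 'function'"
print(console_report("function === without arguments\n", msg))
print(qml_error_stub("error_coffee_function", msg))
```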

Supported languages

Currently, CoffeeScript, LiveScript and (obviously) JavaScript are supported, but more languages can be added later. I will probably add support for linters and minifiers soon.
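
Supporting several languages boils down to a table that maps a language name to something that turns source code into JavaScript. The Python sketch below is hypothetical and only illustrates that shape: JavaScript passes through untouched, and CoffeeScript is piped through the `coffee` command-line compiler with `--stdio --compile --bare` (read source from standard input, print JavaScript, and skip the top-level wrapper function). LiveScript and other languages would be registered as further entries; their exact commands are not shown here.

```python
import shutil
import subprocess


class CompilationError(Exception):
    """Raised when a function body cannot be turned into JavaScript."""


# Hypothetical registry: language name -> command that reads source on stdin and
# prints JavaScript on stdout. None means the source already is JavaScript.
COMPILERS = {
    "javascript": None,
    # CoffeeScript CLI: --stdio pipes source through stdin/stdout,
    # --compile emits JavaScript, --bare omits the top-level safety wrapper
    "coffee": ["coffee", "--stdio", "--compile", "--bare"],
    # Further languages (e.g. LiveScript) would be added as more entries
}


def compile_to_js(lang, source):
    """Return JavaScript for `source`, or raise CompilationError with the compiler's message."""
    if lang not in COMPILERS:
        raise CompilationError(f"unsupported language: {lang}")
    command = COMPILERS[lang]
    if command is None:
        return source
    if shutil.which(command[0]) is None:
        raise CompilationError(f"compiler not found: {command[0]}")
    result = subprocess.run(command, input=source, capture_output=True, text=True)
    if result.returncode != 0:
        # On failure, the compiler's message is read from stderr
        raise CompilationError(result.stderr.strip())
    return result.stdout


print(compile_to_js("javascript", "loadPuzzle();"))
```

Linters and minifiers could fit the same flow as extra steps that take the generated JavaScript and either return it (possibly transformed) or raise an error.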