kdesrc-build is an amazing tool that makes building KDE projects a breeze.

Now, I like having several build profiles for the projects I'm working on. The main build done by kdesrc-build uses gcc, but I also keep a parallel build with clang, along with some builds that incorporate static analysis tools and such.

A long time ago, I did all this with shell scripts, but that approach did not really scale.

Then I wrote a small tool that builds on top of kdesrc-build but lets you define different build profiles.

This tool has now been updated and moved to KDE's brand new GitLab instance at https://invent.kde.org/ivan/kdesrc-build-profiles.

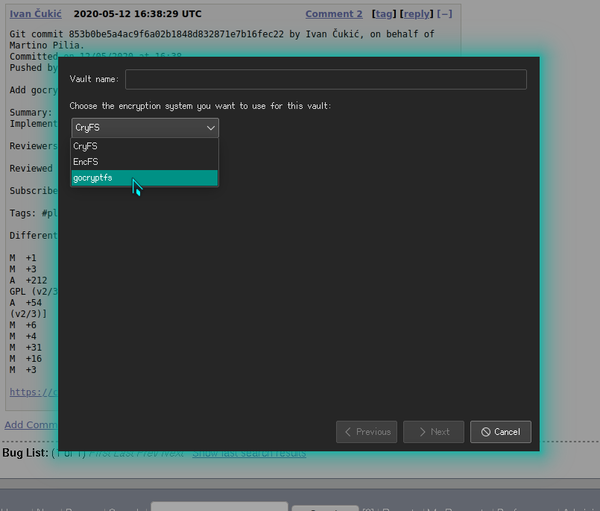

It allows you to create a few profiles and specify which projects you want built with each of them. For example, you can keep parallel builds of plasma-workspace with gcc and the latest clang, while running a static analysis tool on plasma-framework and plasma-vault.
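Conceptually, a profile is a name attached to a list of projects. The shell sketch below is purely illustrative and is not how kdesrc-build-profiles works internally: it hard-codes the example above in a `case` statement, and the profile names `gcc`, `clang` and `static-analysis` are assumptions. In the real tool, this mapping lives in the configuration file.

```shell
# Hypothetical profile table mirroring the example in the text.
# The real tool reads this mapping from kdesrc-build-profilesrc;
# the profile names here are made up for illustration.
projects_for_profile() {
    case "$1" in
        gcc|clang)
            echo "plasma-workspace" ;;
        static-analysis)
            echo "plasma-framework plasma-vault" ;;
        *)
            echo "unknown profile: $1" >&2
            return 1 ;;
    esac
}

# Print which projects each profile would build.
for profile in gcc clang static-analysis; do
    echo "$profile: $(projects_for_profile "$profile")"
done
```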

An example configuration file comes with the program. The format is the same as that of the `kdesrc-buildrc` file, with just a few custom fields.

Compilation database

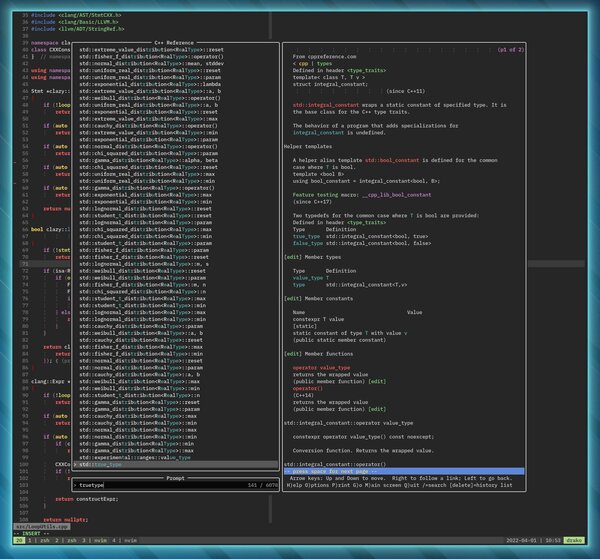

Recently, quite a few C++ IDEs and editors have started using clang behind the scenes to provide code completion, quick fixes, and so on. For the clang integration with your editor or IDE to work properly, you often need to create a so-called compilation database, which contains the compiler flags used to compile your project.
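CMake writes this database, a file named `compile_commands.json`, into the build directory when the standard `CMAKE_EXPORT_COMPILE_COMMANDS` variable is enabled. Below is a minimal sketch of configuring a project that way, assuming CMake 3.13 or newer (for the `-S`/`-B` options). The function name and paths are hypothetical, and this is not necessarily what kdesrc-build-profiles runs internally.

```shell
# Configure a project so that CMake writes compile_commands.json into
# the build directory. CMAKE_EXPORT_COMPILE_COMMANDS is a standard CMake
# variable; only the Makefile and Ninja generators honour it.
configure_with_compdb() {
    src_dir=$1
    build_dir=$2
    cmake -S "$src_dir" -B "$build_dir" -DCMAKE_EXPORT_COMPILE_COMMANDS=ON
}

# Example (placeholder paths):
# configure_with_compdb ~/kde/src/plasma-vault ~/kde/build/plasma-vault
# ls ~/kde/build/plasma-vault/compile_commands.json
```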

I found it quite tedious to set up this database by hand for every project I work on.

Because of this, kdesrc-build-profiles has gained a new feature: it can now symlink the compilation database generated by cmake to the root of your project's sources, where the editor or IDE will easily find it. You just need to add `symlink-compilation-database yes` to the `kdesrc-build-profilesrc` file and run the tool.
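Doing the same by hand comes down to one symbolic link per project. Below is a minimal sketch of the idea, assuming the database already exists in the build directory. The function name is hypothetical, and this is not the tool's own code.

```shell
# Hypothetical helper: link <build_dir>/compile_commands.json into
# <src_dir>, where clang-based editors look for it.
link_compilation_database() {
    if [ ! -f "$1/compile_commands.json" ]; then
        echo "no compile_commands.json in $1" >&2
        return 1
    fi
    # Use an absolute target so the link resolves correctly from src_dir.
    build_dir=$(cd "$1" && pwd) || return 1
    # -s creates a symbolic link, -f replaces an existing one.
    ln -sf "$build_dir/compile_commands.json" "$2/compile_commands.json"
}

# Example (placeholder paths):
# link_compilation_database ~/kde/build/plasma-vault ~/kde/src/plasma-vault
```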

Installation

The program is written in Haskell. To install it, just install the stack package manager and run:

```sh
stack update
stack install kdesrc-build-profiles
```

If you are old-school and use cabal instead of stack, the installation is similar:

```sh
cabal update
cabal install kdesrc-build-profiles
```

If you want to compile it yourself, the steps are also easy:

```sh
git clone https://invent.kde.org/ivan/kdesrc-build-profiles.git
cd kdesrc-build-profiles
stack build
stack install
```

Usage

To use it, copy the `kdesrc-build-profilesrc-example` file into the KF5 sources directory (where you keep the `kdesrc-buildrc` file), dropping the `-example` suffix from its name. Then edit it to fit your setup and run the tool.
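The setup step can be sketched as a small shell function. The function name is hypothetical, and so is the location of the example file, which is assumed here to sit at the top of a clone of the repository. The sketch refuses to overwrite an existing configuration.

```shell
# Hypothetical helper: copy the example configuration next to kdesrc-buildrc,
# dropping the -example suffix. Assumes the example file sits at the top
# of a clone of the kdesrc-build-profiles repository.
install_profiles_config() {
    repo_dir=$1   # clone of kdesrc-build-profiles
    kf5_src=$2    # KF5 sources directory, the one holding kdesrc-buildrc
    target="$kf5_src/kdesrc-build-profilesrc"
    if [ ! -f "$kf5_src/kdesrc-buildrc" ]; then
        echo "no kdesrc-buildrc in $kf5_src" >&2
        return 1
    fi
    if [ -e "$target" ]; then
        echo "$target already exists, not overwriting" >&2
        return 1
    fi
    cp "$repo_dir/kdesrc-build-profilesrc-example" "$target"
}

# Usage (placeholder paths):
# install_profiles_config ~/src/kdesrc-build-profiles ~/kde/src
# "${EDITOR:-vi}" ~/kde/src/kdesrc-build-profilesrc
```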

The commands are quite simple. For example, if you want to build all the projects you configured under the clang profile, run:

```sh
kdesrc-build-profiles clang
```

If you want to build only plasma-desktop and plasma-workspace with clang, run:

```sh
kdesrc-build-profiles clang plasma-desktop plasma-workspace
```
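Since a profile is just a name on the command line, keeping the gcc and clang builds in step can be scripted. Below is a small sketch, assuming profiles named `gcc` and `clang` exist in your `kdesrc-build-profilesrc`. `build_with_profiles` is a hypothetical helper and not part of the tool.

```shell
# Hypothetical helper: build the given projects (or everything configured,
# if none are given) under each profile in turn. Stops at the first
# profile whose build fails.
build_with_profiles() {
    profiles=$1   # space-separated list, split on purpose below
    shift
    for profile in $profiles; do
        kdesrc-build-profiles "$profile" "$@" || return 1
    done
}

# Usage:
# build_with_profiles "gcc clang" plasma-desktop plasma-workspace
```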

For more commands, run `kdesrc-build-profiles --help`.